Small breakthroughs applied in new ways can reshape the world. What was true for the Allied powers in 1942, by then monitoring coded Japanese transmissions in the Pacific theater of World War II, is just as relevant in the Enterprise storage business today. In both cases, more than a decade of incremental improvement and investment went into incubating technologies with the potential to disrupt the status quo. In the case of the Allied powers, the outcome changed the world. In the case of the Enterprise storage business, the potential exists to disrupt the conventional Enterprise SAN business, which has remained surprisingly static for the past 10 years.

In large and small enterprises alike, one thing that everyone can agree on is that storage chews through capital. In some years it’s a capacity problem. In other years, it’s a performance problem. And occasionally, when it happens to be neither of those… it’s a vendor holding you hostage with a business model reminiscent of mainframe computing. You’re often stuck between developers demanding a bit more of everything, leadership wanting cost reductions, and your operations team struggling with their dual mandate.

Storage, it seems… is a constant problem.

If you roll up all of your storage challenges, the root of the problem with Enterprise storage and the SAN market over the better part of the past decade is that storage has all too often looked like a golden hammer. Running out of physical capacity this year? Buy a module that gives you more capacity. A performance issue, you say? Just buy this other expensive module with faster disks. Historically, it’s been uncreative. If that pretty much resembles what your assessment of the storage market was the last time you looked, you’d certainly be forgiven for having low expectations.

Fortunately, it looks like we’re finally seeing disruption in Enterprise storage from a number of technologies and businesses, and this has the potential to change the face of the industry in ways that just a few years ago wouldn’t have even been envisioned.

Where were we a few years ago?

When you break down your average SAN, by and large it consists of commodity hardware. From standard Xeon processors and run-of-the-mill memory to the same basic mix of enterprise HDDs… for the most part it’s everything you’d expect to find. Except perhaps for that thin layer of software where the storage vendor’s intellectual property lives. That intellectual property (more specifically, the reliability of that intellectual property) is why there are so few major storage vendors. Dell, EMC, NetApp, IBM, and HP are the big players that own the market. They own the market because they’ve produced reliable solutions, and as a result they’re the ones that we trust not to make a mess of things. Beyond that, they also acquired strategic niche players that popped up over the years. In a nutshell, trust and reliability are why enterprise storage costs what it does, and that’s exactly why these companies are able to sustain their high margins… they simply have a wide moat around their products. Or, at least, they used to. Flashing forward to today, things are finally starting to change.

What’s changed? Flash memory:

For years the only answer to storage has been more spinning magnetic disks (HDDs). Fortunately, over the past few years, we’ve had some interesting things happen. The first is the same thing that caused laptops and tablets to outpace desktops: flash memory. Why flash memory? Because it’s fast. Over the past couple of years, SSDs and flash technology have finally been applied to the Enterprise storage market, a fact which is fundamentally disruptive. As you know, on random I/O a typical SSD outperforms even the fastest HDD by orders of magnitude. The fact that flash memory is fast and the fact that it’s changing the Enterprise storage market might not be news to you, but if there was any doubt… here are some of my takeaways concerning SSD Adoption Trends from August 2013, based on the work done by Frank Berry and Cheryl Parker at IT Brand Pulse.

These changes range from the obvious…

- As SSDs approach the dollar-per-GB cost of HDDs, organizations are beginning to replace HDDs with SSDs

- Quality is the most important HDD feature

- Organizations are mixing disk types in their arrays in order to achieve the best cost for reliability, capacity, and performance required

- More organizations have added SSDs to their storage array in 2013 than 2012

… to the disruptive…

- Within 24 months, the percentage of servers accessing some type of SSD is expected to double

- SSDs will comprise 3x the total percentage of storage within the next 24 months

- IT is depending increasingly on its storage vendors (to embed the right underlying NAND flash technology in a manner that balances cost, performance, and reliability)

In other words, we’re finally starting to see some real traction in flash-based memory replacing hard disk drives in the Enterprise tier, and that trend appears to be accelerating.

What’s changed? The Cloud:

It seems that no IT article today is complete without a conversation about the Cloud. While some have been quick to either dismiss or embrace it, the Cloud is already starting to pay dividends. Perhaps the most obvious change is applying the Cloud as a new storage tier. If you can take HDDs, mix in some sort of flash memory, and then add the Cloud… you could potentially have the best of all possible worlds. Add in some intellectual property that abstracts out the complexity of dealing with these inherently different subsystems, and you get a mostly traditional-looking SAN that is fast, unlimited, and forever. Or, at least that’s the promise from vendors like Nasuni and StorSimple (now a Microsoft asset), who have taken HDDs, SSDs, and the Cloud and delivered a fairly traditional SAN-like appliance. However, these vendors have taken the next step and inserted themselves between you and the Cloud. Instead of you having to spin up nodes on AWS or Azure, vendors like Nasuni have taken that complexity out and baked it into their service. On the surface, your ops team can now leverage the Cloud transparently. Meanwhile, Nasuni has successfully inserted itself as a middleman in a transaction that is ongoing and forever. Whether that’s a good thing, I’ll leave up for debate. But it works quite well and solves most of your storage problems in a convenient package.

The Hidden Cloud?

The storage industry’s first pass at integrating the Cloud has been interesting. If not yet transformative in terms of Enterprise storage, it’s definitely on everyone’s radar. What’s arguably more interesting and relevant, and what has the potential to be truly transformative, is the trickle-down benefits that come from dealing with Big Data. In short, it’s large companies solving their own problems in the Cloud, and enabling their customer base as a byproduct. The Cloud today, much like the Space Race of the 1960s and the cryptography advancements of the 1940s, is a transformative pressure with the potential to reshape the world.

… and the most disruptive advancements are probably coming in ways you haven’t suspected.

Data durability.

In much the same way that the Japanese assumed that their ciphers were secure in 1942, IT organizations often assume RAID to be the basic building block of Enterprise storage. As in, it goes without saying that your storage array is going to rely on RAID6, or RAID10, or what have you. After all, when was the last time you really gave some thought to the principles behind RAID technologies? RAID relies on classic erasure codes (a type of algorithm) for data protection, enabling you to recover from drive failures. But we’re quickly reaching a point where disk-based RAID approaches combined with classic erasure codes, like Reed-Solomon in RAID6 (which can tolerate up to 2 failures), simply aren’t enough to deal with the real-world risk inherent in large datasets with many physical drives. Unsurprisingly, this is a situation that we tend to find in the Cloud. And interestingly, one type of solution to this problem grew out of the space program.
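To make the idea of parity-based recovery concrete, here is a minimal sketch of the simplest erasure code there is: a single XOR parity block, which is the RAID5-style scheme rather than the Reed-Solomon math used for dual parity. The function names and the tiny four-byte "drives" are illustrative assumptions, not anything a real array exposes.

```python
from functools import reduce


def xor_blocks(blocks):
    """XOR equal-length byte blocks together, position by position."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def make_stripe(data_blocks):
    """Return the data blocks plus one XOR parity block (single-parity stripe)."""
    return list(data_blocks) + [xor_blocks(data_blocks)]


def recover(stripe, lost_index):
    """Rebuild the block at lost_index by XOR-ing every surviving block."""
    survivors = [block for i, block in enumerate(stripe) if i != lost_index]
    return xor_blocks(survivors)


# Three hypothetical "drives" of data plus one parity drive.
data = [b"AAAA", b"BBBB", b"CCCC"]
stripe = make_stripe(data)

# Pretend drive 1 died and rebuild its contents from the other three.
rebuilt = recover(stripe, lost_index=1)
```

A single parity block survives exactly one lost drive. Tolerating two or more simultaneous failures needs additional, independently computed parity, which is where codes like Reed-Solomon come in.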

The medium of deep space is often noisy. There’s background radiation, supernovae, solar wind and other phenomena that conspire to damage data packets in transit, rendering them indecipherable by the time they reach their destination. When combined with situations where latency can be measured in hours or days, re-transmission is at best an inconvenience and at worst a show stopper. Consider the Voyager 1 probe for a moment. When the round trip takes about 34 hours and the available bandwidth is on the order of 1.4 kbit/s, instructing Voyager to re-transmit data can frustrate the science team, or could even put the mission at risk. In other cases, where two-way communication isn’t possible, or the window for operation is narrow (say, if you had to ask Huygens to re-transmit from the surface of Titan), re-transmission is simply a non-starter. Given these and other constraints, the need for erasure encoding was obvious.

When erasure encoding is applied to storage, the net of it is that you’re using CPU (math) to create new storage efficiencies (by storing less). In real-world terms, when rebuild times for 2TB disks (given a certain I/O profile) are measured in hours or days, traditional RAID arrays simply aren’t able to deal with the risk that a second (or third) drive could fail while waiting on the rebuild. What’s the net result? Erasure encoding prevents data loss and decreases the need for storage, as well as its supporting components (fewer drives, which translates into less maintenance, lower power requirements, etc.). The need for data durability has spurred the implementation of new erasure codes, targeting multi-level durability requirements, which can reduce storage demands and increase efficiencies.
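The arithmetic behind both claims is simple enough to sketch. The snippet below estimates how long a rebuild takes at a given sustained throughput, and how much raw capacity a k+m erasure code needs compared with keeping full copies. The 50 MB/s rebuild rate and the 10+4 fragment layout are assumptions chosen for illustration, not figures from any vendor.

```python
def rebuild_hours(disk_tb, throughput_mb_per_s):
    """Hours to rewrite a whole disk at a sustained rebuild throughput."""
    total_bytes = disk_tb * 1e12
    bytes_per_second = throughput_mb_per_s * 1e6
    return total_bytes / bytes_per_second / 3600


def erasure_coded_raw_tb(usable_tb, data_fragments, parity_fragments):
    """Raw TB needed when every k data fragments carry m parity fragments."""
    return usable_tb * (data_fragments + parity_fragments) / data_fragments


def mirrored_raw_tb(usable_tb, copies):
    """Raw TB needed when every byte is stored as `copies` full copies."""
    return usable_tb * copies


# Assumed rebuild rate for a 2TB disk on a busy array: 50 MB/s.
hours = rebuild_hours(disk_tb=2, throughput_mb_per_s=50)

# 100 TB of usable data: two-way mirroring versus a hypothetical 10+4 code.
mirrored = mirrored_raw_tb(100, copies=2)
coded = erasure_coded_raw_tb(100, data_fragments=10, parity_fragments=4)
savings = 1 - coded / mirrored

print(f"rebuild: {hours:.1f} h, mirrored: {mirrored} TB, coded: {coded} TB")
```

Under these assumptions the rebuild window is roughly half a day, and the 10+4 layout tolerates four lost fragments while using about 30% less raw capacity than a mirror that tolerates only one lost copy.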

Two examples of tackling the data durability problem through the use of new erasure codes include Microsoft’s Local Reconstruction Codes (LRC) erasure encoding, which is a component of Storage Spaces in Windows Server 2012 R2, and Amplidata’s Bitspread technology.

In the case of Microsoft LRC encoding, it can yield 27% more IOPS given the same storage overhead as RAID6, or 11% less storage overhead given the same reconstruction I/O relative to RAID6.
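The reconstruction I/O savings come from the "local" part of the name: data fragments are split into small groups, each with its own parity, so a single lost fragment can be rebuilt by reading only its group. The sketch below is a toy illustration of that idea using plain XOR parity per group. It is not Microsoft's implementation, it omits the global parities a real LRC also keeps for multi-failure cases, and the six-fragment, two-group layout is a hypothetical choice.

```python
from functools import reduce


def xor_blocks(blocks):
    """XOR equal-length byte blocks together, position by position."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def encode_local_groups(fragments, group_size):
    """Split fragments into groups and attach one XOR parity per group."""
    groups = []
    for start in range(0, len(fragments), group_size):
        members = fragments[start:start + group_size]
        groups.append({"data": list(members), "parity": xor_blocks(members)})
    return groups


def rebuild_fragment(groups, group_index, lost_index):
    """Rebuild one lost fragment; return it with the number of fragments read."""
    group = groups[group_index]
    survivors = [f for i, f in enumerate(group["data"]) if i != lost_index]
    survivors.append(group["parity"])
    return xor_blocks(survivors), len(survivors)


# Six hypothetical data fragments, protected as two local groups of three.
fragments = [bytes([value]) * 4 for value in range(1, 7)]
groups = encode_local_groups(fragments, group_size=3)

# Lose the first fragment of the second group and rebuild it locally.
rebuilt, reads = rebuild_fragment(groups, group_index=1, lost_index=0)
```

Here the rebuild reads three fragments (two surviving neighbors plus the local parity). A single parity computed across all six fragments would have required reading six, which is the kind of trade-off between storage overhead and reconstruction I/O that the figures above describe.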

Amplidata’s approach to object-based storage is its Bitspread erasure coding technology, which distributes data redundantly across a large number of drives. Amplidata claims it requires 50% to 70% less storage capacity than traditional RAID. It works by encoding (as in, mathematically transforming) data (files, pictures, etc.) at storage nodes, using proprietary equations. Based on a user-definable policy, you can lose multiple stores or nodes and Bitspread can still heal the loss. Amplidata’s intellectual property is such that it can work for small or very large, exabyte-sized data sets. The result in a failure situation is much faster recovery, with fewer total drives and less overhead than RAID6.

The Hidden Cloud: Continued…

When Microsoft was building Azure for its internal services a few years ago, the company knew it needed a scale-out, highly available storage solution. Like the rest of us, they didn’t want to pay a storage tax to one of the big storage vendors for something they clearly had the technical capability to architect in-house. What’s more, for Azure to be a viable competitor to AWS, they were driven to eliminate as much unnecessary cost from their datacenters as possible. The obvious low-hanging fruit in this scenario is storage, in the form of Windows Azure Storage (WAS).

Stepping back, Microsoft implemented the LRC erasure coding component within Storage Spaces, enabling software-defined storage within the context of the Windows Server OS, which you can use in your datacenter to create elastic storage pools out of just about any kind of disk, just like Microsoft does in Azure.

One of the most interesting trickle-down benefits that I’ve seen from the Cloud comes in the form of a Microsoft Windows Server 2012 R2 feature known as Storage Spaces. The pitch for Storage Spaces boils down to this… Storage Spaces is a physically scalable, continuously available storage platform that’s more flexible than traditional NAS/file-sharing, offers similar performance to a traditional SAN, and does so at a commodity-like cost point. In other words, Storage Spaces is Cloud storage for the Enterprise without the storage tax.

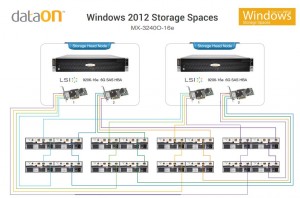

The obvious question becomes, “So how is Storage Spaces, as an implementation, any different than the intellectual property of the traditional SAN vendors? Isn’t Microsoft just swapping out the vendor’s intellectual property for Microsoft Storage Spaces technology?” Yes, that’s exactly what’s happening here. The difference is that you’re now just paying for the OS license plus commodity hardware, instead of paying for the privilege of buying hardware from a single storage vendor as well as their intellectual property. In the process, you’re effectively eliminating much of the storage tax premium. You still need to buy storage enclosures, but instead of vendor-locked-in arrays, they’re JBOD arrays, like one of the many offered by DataON Storage, as well as other Storage Spaces-certified enclosures.

The net result is dramatically lower hardware costs, which Microsoft calculates, based on its Azure expenses, to be on the order of 50% lower. As another datapoint, CCO was able to avoid purchasing a new $250,000 SAN and instead acquire a $50,000 JBOD/Storage Spaces solution. Now granted, that’s a Microsoft-provided case study, so your mileage may vary. But the promise is to dramatically cut storage costs. In a sense, Storage Spaces resembles a roll-your-own SAN type of approach, where you can build out your Storage Spaces-based solution, using your existing Microsoft infrastructure skill sets, to deliver a scale-out, continuously available storage platform with auto-tiering that can service your VMs, databases, and file shares. Also, keep in mind that Storage Spaces isn’t limited to Hyper-V VMs, as Storage Spaces can export NFS mount points, which your ESXi, Xen, etc. hosts can use.

The Real World:

What attributes are desirable in an Enterprise storage solution today?

- Never Fails. Never Crashes. Never have to worry.

- Intelligently moves data around to the most appropriate container (RAM, Flash-memory, Disk)

- Reduces the need for storage silos that crop-up in enterprises (auto-tiers based on demand)

- Reduces the volume of hardware, by applying new erasure encoding implementations

- Inherits the elastic and unlimited properties of the Cloud (while masking the undesirable aspects)

- Requires less labor for management

- Provides automated disaster recovery capabilities

As IT decision makers, we want something that eliminates the storage tax, drives competition among the big players, and most importantly is reliable. While flash memory, the Cloud, and new technologies are all driving the evolution of Enterprise storage, it’s the trickle-down benefits that come in the form of emerging technologies that are often the most relevant.

While the U.S. was monitoring the coded Japanese transmissions in the Pacific, they picked up on a target known as “objective AF”. Commander Joseph J. Rochefort and his team sent a message via secure undersea cable, instructing the U.S. base at Midway to radio an uncoded message stating that their water purification system had broken down and that they were in need of fresh water. Shortly after planting this disinformation, the U.S. team received and deciphered a Japanese coded message which read, “AF was short on water”. The Japanese, still relying on a broken cipher, not only revealed that AF was Midway, but they went on to transmit the entire battle plan along with planned attack dates. With the strategy exposed, U.S. Admiral Nimitz entered the battle with a complete picture of the Japanese strength. The outcome of the battle was a clear victory for the United States, and more importantly the Battle of Midway marked the turning point in the Pacific.

Outcomes can turn on a dime. The Allied powers’ code-breaking capabilities in both the European and Pacific theaters are credited with playing pivotal roles in the outcome of World War II. In business, as in war, breakthrough and disruptive technologies are often credited with changing the face of the world. Such breakthroughs are often hard-fought, and the result of significant investment of time and resources with unknown, and often surprising, outcomes. Enterprise storage pales when viewed in comparison with the need to protect lives (perhaps the ultimate incubator). But nevertheless, the Cloud is incubating new technologies and approaches to problems previously thought of as solved, like data durability, and has the potential to fundamentally change the storage landscape.

With this article, I tried to step back and look at the industry at a high level and see where the opportunities for disruption are, where we’re headed, and the kind of challenges that a sysadmin who is taking a fresh look at Enterprise storage might be faced with today. As part of my option analysis effort, I got a bit deeper with Nasuni and Microsoft Storage Spaces, and compiled that information, and some “getting started” pricing, in the 4800+ word Enterprise Storage Guide. So if you sign up for my Newsletter here, you’ll get the Enterprise Storage Guide as a free download (PDF format) in the welcome email. No spam, ever. I’ll just send you the occasional newsletter with content similar to this article, or the guide. And of course, you can unsubscribe at any time.
